Retention Policy

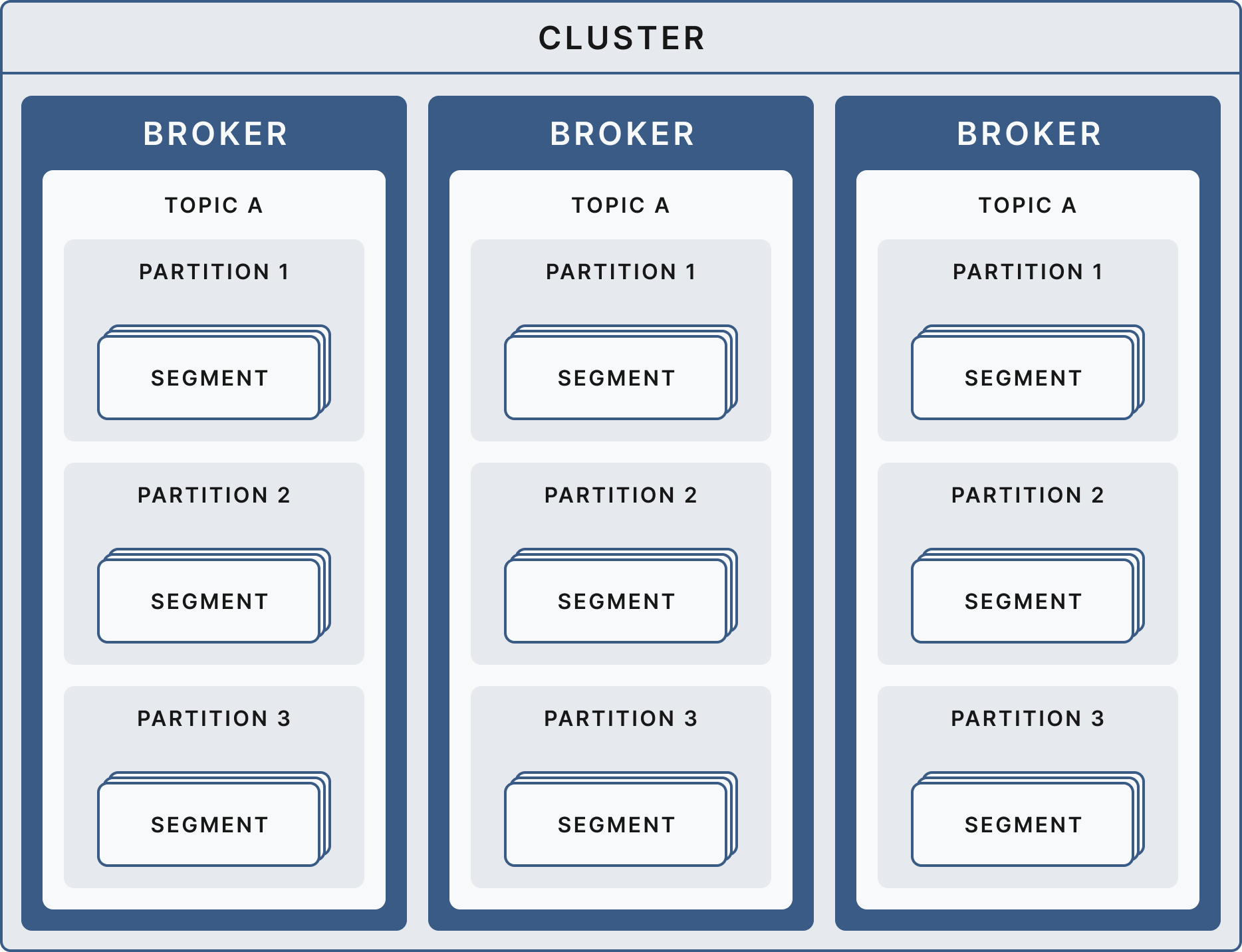

In Kafka, a topic is divided into partitions, which are spread among the brokers in the cluster. Each partition's data is written to a sequence of segments. A segment is a data file on the broker where the events are actually written. Only one segment per partition, the active segment, accepts writes at any time. Once it is full, a new segment is opened and the prior one is considered inactive.
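The rolling behaviour described above can be sketched with a toy in-memory model. This is only an illustration, not Kafka's implementation: `Segment`, `PartitionLog`, and `max_segment_bytes` are hypothetical names, and the size limit is set to 10 bytes so the effect is easy to see.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    base_offset: int  # offset of the first record stored in this segment
    records: list = field(default_factory=list)
    size_bytes: int = 0


class PartitionLog:
    """Toy model of one partition: a list of segments, the last one is active."""

    def __init__(self, max_segment_bytes: int):
        self.max_segment_bytes = max_segment_bytes  # hypothetical size limit
        self.next_offset = 0
        self.segments = [Segment(base_offset=0)]

    def append(self, record: bytes) -> int:
        active = self.segments[-1]
        # Roll to a new segment if this record would push the active one past the limit.
        if active.records and active.size_bytes + len(record) > self.max_segment_bytes:
            active = Segment(base_offset=self.next_offset)
            self.segments.append(active)
        active.records.append(record)
        active.size_bytes += len(record)
        offset = self.next_offset
        self.next_offset += 1
        return offset


log = PartitionLog(max_segment_bytes=10)
for _ in range(5):
    log.append(b"abcd")  # each record is 4 bytes

# Two full (inactive) segments followed by the active one.
print([(s.base_offset, s.size_bytes) for s in log.segments])
# → [(0, 8), (2, 8), (4, 4)]
```

Each segment is identified by the offset of its first record, so the inactive segments can later be removed or compacted without touching the segment that is still being written.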

Configurations

log.segment.bytes The maximum size of a single segment file. Defaults to 1 GB.

log.roll.ms (or log.roll.hours; the topic-level equivalent is segment.ms) The maximum time a segment can stay active before Kafka rolls to a new one, even if log.segment.bytes has not yet been reached. Defaults to 7 days.

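log.retention.(ms/minutes/hours) The amount of time a log segment is kept before Kafka marks it for deletion. If more than one of these is set, ms takes precedence over minutes, which takes precedence over hours. The default value is one week (log.retention.hours=168).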

log.retention.bytes The maximum size a partition can reach before Kafka marks its oldest segments for deletion. The limit applies per partition, not per topic. The default value is -1 (no size limit).
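How the time and size limits select segments can be illustrated with a small simulation. This is a simplified sketch, not Kafka's actual code: `SegmentInfo` and `segments_to_delete` are hypothetical names, the last segment in the list is assumed to be the active one and is never selected, and a limit of -1 disables the corresponding check.

```python
from typing import NamedTuple


class SegmentInfo(NamedTuple):
    base_offset: int
    size_bytes: int
    newest_record_ms: int  # timestamp of the newest record in the segment


def segments_to_delete(segments, now_ms, retention_ms=-1, retention_bytes=-1):
    """Return base offsets of inactive segments that breach either limit.

    `segments` is ordered oldest first. Deletion always proceeds from the
    oldest segment and stops at the first one that breaches neither limit.
    """
    inactive = segments[:-1]  # assumption: the last segment is the active one
    total = sum(s.size_bytes for s in segments)
    excess = (total - retention_bytes) if retention_bytes >= 0 else 0

    doomed = []
    for seg in inactive:
        expired = retention_ms >= 0 and now_ms - seg.newest_record_ms > retention_ms
        oversized = retention_bytes >= 0 and excess >= seg.size_bytes
        if not (expired or oversized):
            break
        doomed.append(seg.base_offset)
        excess -= seg.size_bytes
    return doomed


HOUR = 3_600_000
now = 200 * HOUR
segments = [
    SegmentInfo(0, 100, now - 200 * HOUR),  # 200 hours old
    SegmentInfo(10, 100, now - 100 * HOUR),
    SegmentInfo(20, 100, now - 1 * HOUR),
    SegmentInfo(30, 50, now),               # active segment
]

print(segments_to_delete(segments, now, retention_ms=168 * HOUR))  # → [0]
print(segments_to_delete(segments, now, retention_bytes=150))      # → [0, 10]
print(segments_to_delete(segments, now))                           # → []
```

Note that retention works on whole segments: a record is only removed once the entire segment containing it qualifies, so data can outlive the configured limit by up to one segment.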

cleanup.policy (topic-level; the broker-wide default is log.cleanup.policy) The cleanup policy determines what happens to old log segments, including those that have exceeded the retention limits set above. There are three options:

- Delete (default) - Once a retention limit is exceeded, the affected segments are removed entirely.

- Compact - Instead of deleting whole segments, Kafka compacts the log so that only the latest record for each key in the partition is kept.

- Delete & Compact (set as compact,delete) - Enables both: the log is compacted, and segments that exceed the retention limits are still deleted.
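The effect of compaction can be shown with a minimal sketch. It assumes records are plain (offset, key, value) tuples and ignores details of the real log cleaner; the function name `compact` is hypothetical.

```python
def compact(records):
    """Keep only the newest record for each key.

    `records` is a list of (offset, key, value) tuples ordered by offset.
    Surviving records keep their original offsets.
    """
    latest = {}
    for offset, key, value in records:
        latest[key] = (offset, key, value)  # later offsets overwrite earlier ones
    return sorted(latest.values(), key=lambda r: r[0])


records = [
    (0, "user-1", "a"),
    (1, "user-2", "b"),
    (2, "user-1", "c"),
    (3, "user-3", "d"),
    (4, "user-2", "e"),
]

print(compact(records))
# → [(2, 'user-1', 'c'), (3, 'user-3', 'd'), (4, 'user-2', 'e')]
```

The compacted log is no longer contiguous in offsets, but a consumer reading it from the start still ends up with the latest value for every key.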