Efficiently managing disk space in your Apache Kafka cluster is fundamental to maintaining performance and stability. This blog post walks you through the details and pitfalls of topic-level log retention configuration and effective cleanup policies in Apache Kafka.

Compared to many other message brokers, Apache Kafka is designed to store events in its partitions for a period of time instead of deleting them once a client has consumed them. This is part of what makes Apache Kafka powerful: it enables replayability. For this reason, Kafka is often described as a storage engine rather than a traditional message queue. Depending on the use case, a system can be configured to store events forever or to keep them for a shorter period.

Configuring topic-level log retention

When deciding how long to store events, it is important to consider how much disk space will be needed. Sufficient disk space is critical for Kafka to run properly, but storing events indefinitely can quickly grow disk usage and put a heavy load on the system. Fortunately, this can be managed by configuring log retention.

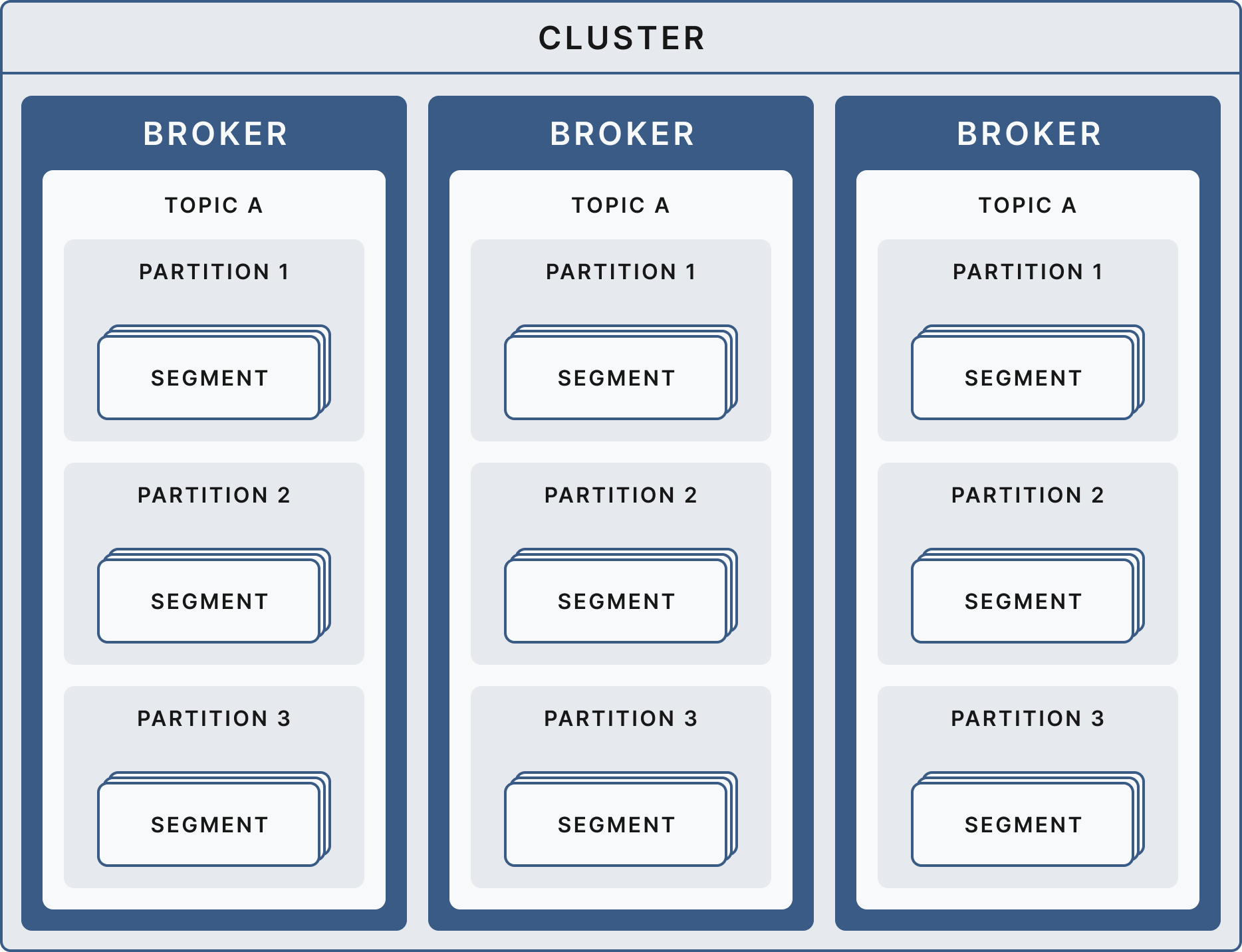

It is important to understand the concept of segments when working with Kafka retention. Topics exist at the cluster level and are divided into partitions that are spread across the brokers. Each partition's data is in turn written into segments. A segment is a single data file on the broker where the events are actually written. Once a segment is full, a new one is opened, and the prior one is closed and considered inactive.
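To make the segment model concrete, here is a minimal Python sketch of a single partition that opens a new segment when the next record would push the active one past a size limit. It is a simplified illustration, not Kafka's implementation: the Partition and Segment classes and the 1,000-byte limit are hypothetical. The only real-world detail it borrows is that Kafka names each segment file after its base offset, zero-padded to 20 digits.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Segment:
    base_offset: int      # offset of the first record in this segment
    size_bytes: int = 0   # bytes written to the segment so far
    active: bool = True   # only the newest segment accepts writes

    @property
    def file_name(self) -> str:
        # Segment files are named after their base offset, zero-padded to 20 digits.
        return f"{self.base_offset:020d}.log"


@dataclass
class Partition:
    segment_bytes: int    # hypothetical roll threshold, playing the role of segment.bytes
    segments: List[Segment] = field(default_factory=list)
    next_offset: int = 0

    def append(self, record_bytes: int) -> None:
        active = self.segments[-1] if self.segments else None
        # Open a segment if none exists yet, or if this record would overflow the active one.
        if active is None or (
            active.size_bytes > 0 and active.size_bytes + record_bytes > self.segment_bytes
        ):
            if active is not None:
                active.active = False  # the prior segment is now closed (inactive)
            active = Segment(base_offset=self.next_offset)
            self.segments.append(active)
        active.size_bytes += record_bytes
        self.next_offset += 1


p = Partition(segment_bytes=1_000)
for _ in range(25):
    p.append(100)  # 25 records of 100 bytes each
for s in p.segments:
    print(s.file_name, s.size_bytes, "active" if s.active else "closed")
```

Writing 25 records of 100 bytes produces two full, closed segments (base offsets 0 and 10) and one half-full active segment starting at offset 20.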

Apache Kafka - Log Retention Configurations

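Here are four retention configurations to keep track of. The names below are the broker-wide defaults; each has a topic-level counterpart (for example, retention.bytes for log.retention.bytes) that overrides it for a single topic: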

log.segment.bytes - The maximum size of a single segment. Defaults to 1 GB.

log.roll.ms (topic level: segment.ms) - The amount of time after which a log segment is closed, even if log.segment.bytes has not been reached. Defaults to one week. A log segment can be considered for expiration (retention) only after it has been closed.

log.retention.(ms/minutes/hours) - The amount of time data is kept before Kafka deletes the segments that contain it. The default value is one week.

log.retention.bytes - The maximum size a partition can reach before Kafka starts deleting its oldest segments. The default value is -1 (no size limit).
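The sketch below expresses the four settings in Python using their topic-level names, together with two simplified checks: when the active segment should be rolled, and when a closed segment becomes eligible for time-based deletion. The default values match Kafka's documented defaults, but should_roll, time_expired, and the three-day override are hypothetical helpers for illustration only; Kafka's actual logic handles more cases than these two functions.

```python
# Topic-level names; the broker-wide equivalents are log.segment.bytes,
# log.roll.ms, log.retention.ms and log.retention.bytes.
DEFAULTS = {
    "segment.bytes": 1_073_741_824,  # 1 GiB
    "segment.ms": 604_800_000,       # 7 days
    "retention.ms": 604_800_000,     # 7 days
    "retention.bytes": -1,           # -1 means no size limit
}

DAY_MS = 24 * 60 * 60 * 1000


def should_roll(size_bytes: int, age_ms: int, cfg: dict = DEFAULTS) -> bool:
    """Hypothetical, simplified: close the active segment when either limit is hit."""
    return size_bytes >= cfg["segment.bytes"] or age_ms >= cfg["segment.ms"]


def time_expired(closed: bool, newest_record_ms: int, now_ms: int, cfg: dict = DEFAULTS) -> bool:
    """Hypothetical, simplified: a closed segment is eligible once its newest record
    is older than retention.ms."""
    return closed and now_ms - newest_record_ms > cfg["retention.ms"]


# A topic that overrides retention.ms to three days.
three_days = {**DEFAULTS, "retention.ms": 3 * DAY_MS}
now = 30 * DAY_MS

print(should_roll(200 * 1024**2, 8 * DAY_MS))                 # True: older than segment.ms
print(time_expired(True, now - 4 * DAY_MS, now))               # False under the 7-day default
print(time_expired(True, now - 4 * DAY_MS, now, three_days))   # True under the 3-day override
print(time_expired(False, now - 8 * DAY_MS, now))              # False: still the active segment
```

The last call shows why segment lifetime matters: however old its data is, a segment that has not been closed is never counted as expired in this model.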

Apache Kafka Cleanup Policy

The cleanup policy determines what happens to old log data, for example once it has met the limits of the retention settings above. There are three options:

cleanup.policy

- delete (default) - Segments are removed entirely once the retention limits are met.

- compact - Instead of deleting segments, Kafka compacts the log so that only the latest record for each key in the partition is kept.

- compact,delete - Enables log compaction but still respects the retention limits, deleting old segments once they are met.
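Here is a minimal Python sketch of what compaction keeps: the latest record per key, in offset order. compact_delete then also drops records older than the retention period. Both functions and the sample records are hypothetical and operate on individual records for clarity; real Kafka compacts and deletes at the segment level, does not compact the active segment, and handles null-value records (tombstones) specially, none of which is modeled here.

```python
from typing import List, Tuple

# (offset, key, value, timestamp_ms), in offset order
Record = Tuple[int, str, str, int]


def compact(records: List[Record]) -> List[Record]:
    """Like cleanup.policy=compact: keep only the latest record for each key."""
    latest = {}
    for offset, key, _value, _ts in records:
        latest[key] = offset  # later offsets overwrite earlier ones
    keep = set(latest.values())
    return [r for r in records if r[0] in keep]


def compact_delete(records: List[Record], now_ms: int, retention_ms: int) -> List[Record]:
    """Like cleanup.policy=compact,delete: compact, then drop records older than retention.
    Simplification: Kafka deletes whole segments, not individual records."""
    return [r for r in compact(records) if now_ms - r[3] <= retention_ms]


log = [
    (0, "user-1", "a", 100),
    (1, "user-2", "b", 200),
    (2, "user-1", "c", 300),
    (3, "user-3", "d", 400),
    (4, "user-2", "e", 500),
]
print([r[0] for r in compact(log)])                    # [2, 3, 4]
print([r[0] for r in compact_delete(log, 1000, 650)])  # [3, 4]
```

Compaction alone keeps one record per key (offsets 2, 3 and 4), so the log never shrinks below one entry per live key; adding delete also removes offset 2 because it is older than the retention window.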

Minimize the risk of running out of disk space in a Kafka cluster

Being aware of the configuration options above should minimize the risk of running out of disk space. You should also estimate your disk usage based on the number of topics and partitions combined with your retention settings.
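As a rough starting point for such an estimate, the sketch below sums a per-partition upper bound over all topics and multiplies by the replication factor, since every replica stores a full copy of its partition. It assumes that a partition can exceed retention.bytes by about one segment and, for topics that rely only on time-based retention, that you can supply an average write rate per partition. The TopicPlan class, the orders and clicks topics, and all their numbers are hypothetical; treat the result as a ballpark figure and leave headroom on top of it.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

GiB = 1024 ** 3
MiB = 1024 ** 2
DAY_MS = 24 * 60 * 60 * 1000


@dataclass
class TopicPlan:
    name: str
    partitions: int
    replication_factor: int                 # every replica stores a full copy
    retention_bytes: int = -1               # per partition; -1 = no size limit
    retention_ms: int = 7 * DAY_MS
    segment_bytes: int = GiB
    write_rate_bps: Optional[float] = None  # assumed average bytes/s per partition


def partition_bytes(t: TopicPlan) -> float:
    """Rough upper bound for one replica of one partition."""
    if t.retention_bytes >= 0:
        # Whole segments are deleted, so a partition can overshoot
        # retention.bytes by up to about one segment.
        return t.retention_bytes + t.segment_bytes
    if t.write_rate_bps is None:
        raise ValueError(f"{t.name}: time-based retention needs a write-rate estimate")
    # Time-based only: data written during retention.ms, plus one
    # segment that may not have been rolled and deleted yet.
    return t.write_rate_bps * t.retention_ms / 1000 + t.segment_bytes


def estimate_cluster_bytes(topics: Iterable[TopicPlan]) -> float:
    return sum(partition_bytes(t) * t.partitions * t.replication_factor for t in topics)


orders = TopicPlan("orders", partitions=10, replication_factor=3,
                   retention_bytes=GiB, segment_bytes=256 * MiB)
clicks = TopicPlan("clicks", partitions=6, replication_factor=3,
                   retention_ms=3 * DAY_MS, write_rate_bps=50_000)
print(f"{estimate_cluster_bytes([orders, clicks]) / GiB:.1f} GiB")
```

Note how the hypothetical orders topic, with only 1 GiB of retention.bytes, still accounts for 37.5 GiB once partitions, replicas, and the extra segment are counted.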

However, there are a few pitfalls that we at CloudKarafka have seen customers fall into:

- The retention.bytes setting applies per partition, not per topic. So if a topic has 10 partitions, expect the topic to take up to 10x the size of this setting.

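- Using a segment.bytes value larger than retention.bytes can break size-based retention, because Kafka deletes whole segments and does not delete the active segment while it is still being written to. Until that segment fills up and is rolled, the partition keeps growing past retention.bytes, possibly until time-based retention removes the data.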

- Similarly, if the segment lifespan (segment.ms) is much longer than the time-based retention, events can remain on disk longer than expected, because a segment's events are only deleted once the whole segment has been closed and has expired.
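The segment.bytes pitfall can be illustrated with a small Python simulation of one partition. It assumes a simplified model: size-based retention removes whole closed segments, oldest first, and only while the partition would still hold at least retention.bytes afterwards; cleanup runs after every write here, whereas Kafka runs it periodically (every log.retention.check.interval.ms). The function and all byte counts are hypothetical.

```python
def peak_partition_bytes(writes: int, record_bytes: int,
                         segment_bytes: int, retention_bytes: int) -> int:
    """Largest partition size seen (after cleanup) under a simplified model."""
    segments = [0]  # segment sizes, oldest first; the last one is active
    peak = 0
    for _ in range(writes):
        # Roll the active segment when the next record would not fit.
        if segments[-1] > 0 and segments[-1] + record_bytes > segment_bytes:
            segments.append(0)
        segments[-1] += record_bytes
        # Size-based retention: delete closed segments, oldest first, only while
        # the partition would still hold at least retention_bytes afterwards.
        while len(segments) > 1 and sum(segments) - segments[0] >= retention_bytes:
            segments.pop(0)
        peak = max(peak, sum(segments))
    return peak


# 5,000 bytes written to a partition with retention.bytes = 1,000.
print(peak_partition_bytes(500, 10, segment_bytes=100, retention_bytes=1_000))     # 1090
print(peak_partition_bytes(500, 10, segment_bytes=10_000, retention_bytes=1_000))  # 5000
```

With 100-byte segments the partition never holds much more than one segment above retention.bytes. With 10,000-byte segments nothing is deleted at all: all 5,000 bytes sit in the still-active segment, five times the configured limit.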

All the best,

The CloudKarafka team

About CloudKarafka

CloudKarafka is a trusted hosting provider of Apache Kafka, offered by 84codes, a Swedish tech company dedicated to simplifying cloud infrastructure for developers. If you have any questions or problems, our support team is on hand 24/7 to help you. Just send an email to support@cloudkarafka.com.