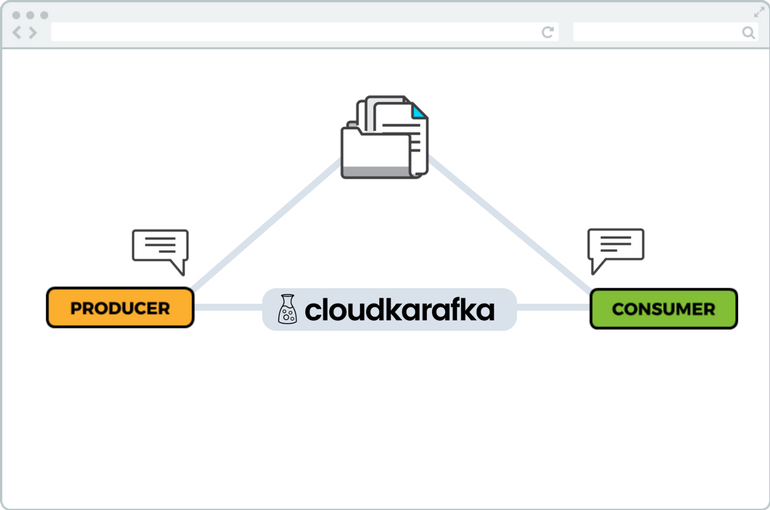

To keep Kafka's characteristics while making it possible to force Kafka components to communicate in a correct format, we introduce the Kafka Schema Registry integration. Schema Registry acts as a standalone component that interacts with both the producer and the consumer and provides a serving layer for your metadata.

In this way, Schema Registry reduces the number of possible conflicts between producer and consumer messages, such as bad data or sudden changes in message format, without affecting Kafka's unique character. Because Schema Registry is a standalone component, the Kafka broker can remain the powerful player in message streaming that it is today.

Kafka Schema Registry - Why Do We Need It?

The concept of Schema Registry is simple: it provides the missing schema component in Kafka. What makes Kafka unique is that it sends and receives raw data between producer and consumer. Kafka simply takes bytes as input and publishes them without any data verification. As a result, Kafka needs less CPU, and it can keep passing raw data between producer and consumer at the rate we are used to.

Still, if the data sent by the producer does not match what the consumer expects, the consumer may have a hard time reading it. This happens when a consumer receives bad data, such as a sudden change in message format, which means that not all data sent from producer to consumer is guaranteed to be readable. Such a check is not built into Kafka itself because it would “overtax” the broker and risk slowing down the stream of unvalidated messages, and that speed is one of Kafka's strengths.
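To make this failure mode concrete, here is a minimal Python sketch. It uses a plain list as a hypothetical stand-in for a topic (it is not the Kafka client API): the "broker" stores whatever bytes it receives, so a producer that renames a field goes unnoticed until the consumer tries to read the message.

```python
import json

# Hypothetical stand-in for a Kafka topic: the "broker" just appends bytes
# and never looks inside them.
topic = []


def produce(record):
    # The producer alone decides the message format; nothing validates it.
    topic.append(json.dumps(record).encode("utf-8"))


def read_names():
    """A consumer that expects every record to carry a "name" field."""
    names = []
    for raw in topic:
        record = json.loads(raw)
        if "name" in record:
            names.append(record["name"])
        else:
            names.append("<unreadable>")  # the format changed under the consumer
    return names


produce({"name": "Alice"})     # the format the consumer expects
produce({"full_name": "Bob"})  # the producer silently renamed the field
produce({"name": "Carol"})

print(read_names())
```

The broker accepted all three messages; only the consumer discovers that the second one no longer matches the format it expects.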

Kafka Schema Registry - How Does It Work?


In these simple steps, we show how Schema Registry works around the problem, creates readable data without involving the Kafka broker, and increases the rate of successful message delivery between producer and consumer.

- The producer creates a message that contains schema and data.

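- The producer's serializer registers the schema with Schema Registry, or finds it in its local cache of already registered schemas and their IDs. It then serializes the data and prefixes it with the schema ID instead of the full schema.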

- The consumer, which has its own schema (the one it expects messages to conform to), receives the payload, reads the schema ID, looks up the full schema by that ID in its cache or in Schema Registry, and deserializes the data.

- A compatibility check between the producer's schema and the consumer's schema has three possible outcomes: a) The schemas match: instant success, and the message is delivered to the consumer. b) The schemas do not match, but they are compatible: the payload is transformed (schema evolution) and the message is delivered. c) The schemas do not match and are not compatible: the message cannot be delivered.
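The serialization and lookup steps above can be sketched in a few lines of Python. This assumes the clients use Confluent's serializers, which frame each message as a magic byte `0`, a 4-byte big-endian schema ID, and then the encoded data; that is why only the ID, not the full schema, travels with every message. `InMemoryRegistry`, `Serializer` and `Deserializer` are hypothetical names, the registry is an in-memory stand-in rather than the real REST service, and JSON replaces Avro's binary encoding to keep the sketch dependency-free.

```python
import json
import struct

MAGIC_BYTE = 0  # first byte of every Confluent wire-format message


class InMemoryRegistry:
    """Hypothetical stand-in for Schema Registry: assigns integer IDs to schemas."""

    def __init__(self):
        self._ids = {}      # canonical schema text -> ID
        self._schemas = {}  # ID -> schema
        self.requests = 0   # counts round trips, to show the effect of client caching

    def register(self, schema):
        self.requests += 1
        key = json.dumps(schema, sort_keys=True)
        if key not in self._ids:  # the same schema always gets the same ID
            new_id = len(self._ids) + 1
            self._ids[key] = new_id
            self._schemas[new_id] = schema
        return self._ids[key]

    def get_by_id(self, schema_id):
        self.requests += 1
        return self._schemas[schema_id]


class Serializer:
    """Producer side: register the schema once, then prefix each payload with its ID."""

    def __init__(self, registry):
        self.registry = registry
        self._cache = {}  # canonical schema text -> ID

    def serialize(self, schema, record):
        key = json.dumps(schema, sort_keys=True)
        if key not in self._cache:
            self._cache[key] = self.registry.register(schema)
        body = json.dumps(record).encode("utf-8")  # JSON stands in for Avro binary here
        # 1 magic byte + 4-byte big-endian schema ID, then the encoded record
        return struct.pack(">bI", MAGIC_BYTE, self._cache[key]) + body


class Deserializer:
    """Consumer side: read the ID, fetch the writer's schema (cached), decode the body."""

    def __init__(self, registry):
        self.registry = registry
        self._cache = {}  # ID -> schema

    def deserialize(self, payload):
        magic, schema_id = struct.unpack(">bI", payload[:5])
        if magic != MAGIC_BYTE:
            raise ValueError("not a Schema Registry framed message")
        if schema_id not in self._cache:
            self._cache[schema_id] = self.registry.get_by_id(schema_id)
        return self._cache[schema_id], json.loads(payload[5:])


registry = InMemoryRegistry()
producer = Serializer(registry)
consumer = Deserializer(registry)

schema = {"type": "record", "name": "User",
          "fields": [{"name": "name", "type": "string"}]}

messages = [producer.serialize(schema, {"name": n}) for n in ("Alice", "Bob")]
decoded = [consumer.deserialize(m) for m in messages]
print(decoded, registry.requests)
```

Because both sides cache schemas by ID, two messages cost only one registration and one lookup; after that, neither client needs to contact the registry for this schema again.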

On top of this, Kafka Schema Registry also supports adding fields to a schema, which allows consumers of the messages to keep working with an older format.
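The adding-fields case, and the three outcomes of the compatibility check, can be illustrated with a simplified version of Avro's schema resolution rules. This Python sketch handles only fields being added or removed (no type promotion, aliases or nested records), and `resolve` and `IncompatibleSchema` are hypothetical names, not part of any client library. In Confluent's Schema Registry, a compatibility check also runs when a new schema version is registered, so an incompatible schema is normally rejected before a producer can use it.

```python
class IncompatibleSchema(Exception):
    """Raised when a record cannot be converted to the reader's schema."""


def field_names(schema):
    return [f["name"] for f in schema["fields"]]


def resolve(record, writer, reader):
    """Convert a record written with `writer` into the shape `reader` expects.

    Simplified Avro-style rules (assumption: only field add/remove is handled):
      - identical schemas: the record is returned unchanged (outcome a)
      - field only in the writer's schema: dropped (outcome b)
      - field only in the reader's schema: filled from its "default" (outcome b)
      - field only in the reader's schema, with no default: incompatible (outcome c)
    """
    if writer == reader:
        return dict(record)
    written = set(field_names(writer))
    result = {}
    for field in reader["fields"]:
        name = field["name"]
        if name in written:
            result[name] = record[name]
        elif "default" in field:
            result[name] = field["default"]
        else:
            raise IncompatibleSchema(f"field {name!r} is missing and has no default")
    return result


v1 = {"type": "record", "name": "User",
      "fields": [{"name": "name", "type": "string"}]}
v2 = {"type": "record", "name": "User",
      "fields": [{"name": "name", "type": "string"},
                 {"name": "email", "type": "string", "default": ""}]}
v3 = {"type": "record", "name": "User",
      "fields": [{"name": "name", "type": "string"},
                 {"name": "age", "type": "int"}]}

print(resolve({"name": "Alice"}, v1, v1))                   # a) schemas match
print(resolve({"name": "Alice"}, v1, v2))                   # b) newer reader fills the default
print(resolve({"name": "Bob", "email": "b@x.io"}, v2, v1))  # b) older reader ignores "email"
try:
    resolve({"name": "Carol"}, v1, v3)                      # c) "age" has no default
except IncompatibleSchema as exc:
    print("failed:", exc)
```

Giving every added field a default is what keeps both directions working: a consumer on the old schema simply skips the new field, and a consumer on the new schema can still read old messages.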

To start using Kafka Schema Registry on your CloudKarafka instance, open the control panel. Under the Connectors menu you will find Schema Registry, where you can select which mode to start it in.

Feel free to send us any feedback you might have at support@cloudkarafka.com