Apache Kafka is poised to take a leading role in the Internet of Things.

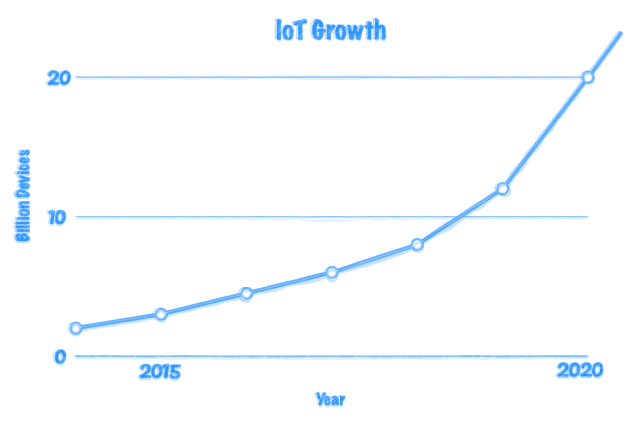

The Internet of Things (IoT) industry is growing at an astonishing rate. Intel estimates that by 2020 the number of connected devices worldwide will cross the 200 billion mark. This unprecedented growth in devices will naturally be accompanied by even greater growth in the data they generate, and coping with that explosive increase calls for specialized tools. Apache Kafka is one such tool: it keeps big data solutions fast and scalable by managing the ingestion of enormous amounts of data. Though not originally intended for IoT devices, Kafka has established itself as one of the staples of large-scale IoT deployments. In this article, you will see exactly why that is the case.

What is Apache Kafka?

Apache Kafka is a distributed streaming platform for big data systems. Like an enterprise messaging system, Kafka stores streams of records, so developers don’t have to hand-code a separate data pipeline for each source-destination pair. Originally developed at LinkedIn, Kafka was designed to handle data ingestion for Hadoop. Since then, it has evolved into a general-purpose data ingestion platform that other frameworks can use as well. Among Kafka’s most prominent features are scalability (it is designed for large data sets), speed (partly because it uses its own binary protocol over TCP rather than HTTP) and fault tolerance. Real-time analytics has become a common use case, because the platform can handle many live data feeds at once. Other use cases include log aggregation for large organizations and tracking website activity.
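To make the idea of "storing streams of records" concrete, here is a minimal Python sketch of a single append-only log with per-consumer offsets. `ToyTopic` and `ToyConsumer` are hypothetical names invented for illustration; they model the concept, not Kafka's client API, and ignore partitions, retention and persistence.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTopic:
    """A single append-only log: a toy model, not Kafka's API."""
    records: list = field(default_factory=list)

    def append(self, value):
        # Each record gets the next sequential offset, as in a Kafka partition.
        self.records.append(value)
        return len(self.records) - 1

    def read_from(self, offset, max_records=10):
        # Reading does not remove records, so any number of consumers can read.
        return self.records[offset:offset + max_records]


@dataclass
class ToyConsumer:
    topic: ToyTopic
    offset: int = 0  # each consumer tracks its own position in the log

    def poll(self):
        batch = self.topic.read_from(self.offset)
        self.offset += len(batch)
        return batch


readings = ToyTopic()
for celsius in (21.5, 21.7, 22.0):
    readings.append({"sensor": "thermostat-1", "celsius": celsius})

realtime = ToyConsumer(readings)
archiver = ToyConsumer(readings)
print(len(realtime.poll()), len(archiver.poll()))  # 3 3
```

Because reading does not consume records, a real-time job and an archiving job can share the same stream instead of each needing its own pipeline from the source.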

How can IoT benefit from Apache Kafka?

At this point, you should be starting to piece together the puzzle. At first glance, Kafka may look like just a fancy message queue for vast amounts of data, but its value becomes much clearer in the IoT space. Every IoT data platform depends on large data sets. Now that wearables and self-driving cars have been added to the mix, individual devices can generate gigabytes of data on any given day, and that is a conservative estimate. So, before you even think about feeding this data to your Hadoop cluster, you need a robust ingestion pipeline.

Let’s take a look at a specific example: home automation. A typical smart home comprises automated security, lighting and home appliances, as well as heating, ventilation and air conditioning (HVAC). The data the system generates is organized into sets, each corresponding to a metric such as light intensity, light usage, ambient temperature or load consumption. Because these are mostly system-on-chip IoT devices, they communicate over lightweight protocols such as Bluetooth LE (BLE), Z-Wave and ZigBee. Over these protocols, the data is collected and passed on to the data processing pipeline through a centralized gateway, the point where the data from all the sensors converges. The pipeline is responsible for managing and storing the data in a fault-tolerant manner, so that the processing layer (Hadoop or Storm) can focus on just that: processing. Kafka sits between the centralized gateway and the processing layer.
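The gateway's role can be sketched as turning protocol-specific readings into uniform key-value records before they reach Kafka. The sketch below assumes a hypothetical `Reading` type and uses the device ID as the record key. `zlib.crc32` is only a stand-in for the murmur2 hash that Kafka's Java client uses for its default key-based partitioning, so the partition numbers will not match a real cluster.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """Hypothetical normalized reading produced by the gateway."""
    device_id: str
    metric: str
    value: float
    protocol: str  # "ble", "zwave" or "zigbee"


def to_record(reading):
    """Convert a reading into a (key, value) pair of bytes."""
    # Keying by device keeps all readings from one device together.
    key = reading.device_id.encode("utf-8")
    value = f"{reading.metric}={reading.value}".encode("utf-8")
    return key, value


def choose_partition(key, num_partitions):
    # Stand-in hash: Kafka's Java client uses murmur2 here, not crc32.
    return zlib.crc32(key) % num_partitions


gateway_input = [
    Reading("hvac-1", "ambient_temperature", 21.5, "zigbee"),
    Reading("lamp-3", "light_intensity", 340.0, "ble"),
    Reading("hvac-1", "ambient_temperature", 21.9, "zigbee"),
]

for r in gateway_input:
    key, value = to_record(r)
    print(choose_partition(key, 6), key, value)
```

Keying by device means that every reading from `hvac-1` lands in the same partition, so consumers see that device's readings in the order the gateway sent them.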

Through Kafka, you can ingest this data for both real-time analytics and batch processing. For instance, ambient temperature data suits the real-time route, because you want your heating system to adjust immediately to current readings. In contrast, light usage or power consumption data for efficiency analysis may be better served by a MapReduce job, because it needs long-term monitoring to yield accurate results. Either way, Kafka streamlines data delivery and keeps the data processing pipeline robust. And because Kafka is not tied to any particular processing framework, it can sit at the front of your data processing pipeline whether you are using Apache Hadoop, Apache Spark or Apache Storm.
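One way to express the real-time versus batch split is a routing rule at the gateway. This is a minimal sketch; the topic names `home.realtime` and `home.batch` and the set of real-time metrics are hypothetical choices for illustration.

```python
from collections import defaultdict

# Hypothetical topic names and metric split, chosen for illustration only.
REALTIME_TOPIC = "home.realtime"
BATCH_TOPIC = "home.batch"
REALTIME_METRICS = {"ambient_temperature"}


def route(metric):
    """Return the topic a reading with this metric should be published to."""
    # Readings that drive immediate actions (such as heating adjustments) go
    # to the real-time topic; everything else accumulates for batch jobs.
    return REALTIME_TOPIC if metric in REALTIME_METRICS else BATCH_TOPIC


def route_all(readings):
    """Group (metric, value) pairs into per-topic batches."""
    batches = defaultdict(list)
    for metric, value in readings:
        batches[route(metric)].append((metric, value))
    return dict(batches)


batches = route_all([
    ("ambient_temperature", 21.5),
    ("light_usage", 0.8),
    ("load_consumption", 1.2),
])
for topic, records in batches.items():
    print(topic, records)
```

An alternative design publishes everything to a single topic and lets a stream processor and a batch job consume it independently, each in its own consumer group; which works better depends on retention and access patterns.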

Hopefully, this simplified example of IoT working in tandem with Apache Kafka gave you some insight into how large-scale data sets can take advantage of Kafka’s high throughput to deliver results in real time.

Kafka vs. MQTT vs. Flume vs. HTTP

Of course, Kafka isn’t the only game in town when it comes to data ingestion. Still, it is important to hash out the differences between Kafka and similar platforms, because some of them are not in fact direct substitutes for Kafka.

On paper, both MQTT and Kafka follow the publish/subscribe (pub/sub) messaging pattern. The key distinction between the two lies in how they are put to use. Unlike Kafka, MQTT is a lightweight machine-to-machine (M2M) messaging protocol. This means that while Kafka is responsible for data management and ingestion into computation systems, MQTT specializes in exchanging small messages between physical devices through a broker. In our home automation example, if we want to adjust the air conditioning according to ambient temperature, the temperature sensor must be able to communicate with the air conditioner, and that communication can be carried over MQTT. As expected, MQTT is designed for small messages, where responsiveness and power efficiency take priority. It is not built to store and deliver large volumes of data to downstream systems, so it scales poorly in that role. Kafka, on the other hand, is designed specifically with massive data feeds in mind. Well-designed IoT systems therefore often use both, each in its own role: MQTT for device-level messaging and Kafka as the ingestion layer behind it, for maximum performance and scalability.
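When MQTT and Kafka are combined, something has to bridge them: a component that subscribes to the MQTT broker and republishes messages into Kafka. In practice that is often an off-the-shelf MQTT connector for Kafka Connect; the function below is only a hypothetical sketch of the mapping such a bridge performs, assuming an MQTT topic scheme of `home/<room>/<metric>`.

```python
def mqtt_to_kafka(mqtt_topic, payload):
    """Map one MQTT message to a (kafka_topic, key, value) triple.

    Assumes a hypothetical MQTT topic scheme 'home/<room>/<metric>'.
    """
    parts = mqtt_topic.split("/")
    if len(parts) != 3 or parts[0] != "home":
        raise ValueError(f"unexpected MQTT topic: {mqtt_topic!r}")
    _, room, metric = parts
    # Many fine-grained MQTT topics collapse into one Kafka topic per metric;
    # the room becomes the record key so each room's readings stay in order.
    return f"home.{metric}", room.encode("utf-8"), payload


print(mqtt_to_kafka("home/livingroom/temperature", b"21.5"))
```

Collapsing topics this way keeps the number of Kafka topics small, which typically matters more on the Kafka side, where each topic carries partitions and replicas, than on the MQTT side, where topics are lightweight.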

A closer comparison to Kafka is Apache Flume. Both perform similar functions, which makes the comparison fair to some extent. As discussed earlier, while Kafka works well with Hadoop, it is more broadly a general-purpose data ingestion platform. Flume, however, is designed primarily to deliver data to the Hadoop Distributed File System (HDFS) and HBase. This means that right off the bat, if your data needs to be processed by a system other than Hadoop, the decision has been made for you. But if you are planning on using Hadoop, Flume’s narrower focus may serve you better. Flume can process data before it even reaches your compute cluster (through its interceptors), which makes pre-processing a breeze. The Kafka brokers themselves do no such processing, so developers have to handle pre-processing in separate applications, for example with the Kafka Streams library. But what Kafka lacks in dedicated Hadoop support, it makes up for in data availability. Unlike Kafka, Flume does not replicate events. So, if the machine running a Flume agent goes silent, the events stored on it are unavailable until its disks have been recovered. At the end of the day, you can combine Kafka and Flume if you are on Hadoop. This gives you the fault tolerance and high availability of Kafka, along with Flume’s dedicated support for HBase and HDFS.
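The availability argument can be illustrated with a toy model: a record written to three replicas survives the loss of one node, while a record held on a single node does not. This is a deliberately simplified Python sketch; `Broker`, `replicate` and `read_all` are hypothetical, and the model ignores Kafka's leader election, in-sync replica tracking and producer acknowledgements.

```python
from dataclasses import dataclass, field


@dataclass
class Broker:
    """Hypothetical node holding one copy of a partition's log."""
    name: str
    alive: bool = True
    log: list = field(default_factory=list)


def replicate(record, replicas):
    """Write a record to every live replica (toy model of replication)."""
    for broker in replicas:
        if broker.alive:
            broker.log.append(record)


def read_all(replicas):
    """Read from the first live replica, as a surviving leader would serve it."""
    for broker in replicas:
        if broker.alive:
            return list(broker.log)
    raise RuntimeError("no live replica: data unavailable until recovery")


# Replication factor 3: the partition lives on three brokers.
kafka_like = [Broker("b1"), Broker("b2"), Broker("b3")]
# A single unreplicated copy, like events on one Flume agent's local disk.
flume_like = [Broker("agent-1")]

for cluster in (kafka_like, flume_like):
    replicate({"metric": "light_usage", "value": 0.8}, cluster)
    cluster[0].alive = False  # the first node fails after the write

print(read_all(kafka_like))  # still readable from b2
```

Reading from `flume_like` after the failure raises `RuntimeError`, which is the "in the dark until the disks have been recovered" situation described above.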

Finally, let’s look at HTTP and Kafka. Instead of HTTP, Kafka uses its own binary protocol over TCP. Avoiding HTTP’s overhead helps Kafka perform faster and scale. To maintain this level of performance, it is crucial to use native clients instead of HTTP proxies. As Kafka is written in Java and Scala, your best bet is the native Java client library. You may also find the librdkafka C/C++ library useful, with bindings for many languages including Node.js, Ruby, Python, Rust and Swift.
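To show what a binary protocol over TCP looks like in practice, the sketch below hand-encodes an ApiVersions request (API key 18), following the request header v1 layout described in Kafka's protocol documentation: a big-endian INT16 API key, INT16 API version, INT32 correlation ID and a length-prefixed client ID, with the whole request prefixed by its INT32 size. It is for illustration only; the client ID `iot-gateway` is arbitrary, and real applications should use a native client rather than encoding requests by hand.

```python
import struct

API_VERSIONS_KEY = 18  # ApiVersions, sent by clients when they connect


def encode_request_header(api_key, api_version, correlation_id, client_id):
    """Request header v1: INT16 api_key, INT16 api_version,
    INT32 correlation_id, then client_id as an INT16-length-prefixed string."""
    client = client_id.encode("utf-8")
    return struct.pack(">hhih", api_key, api_version, correlation_id,
                       len(client)) + client


def frame(payload):
    # Every request on the TCP connection is prefixed with its INT32 size.
    return struct.pack(">i", len(payload)) + payload


header = encode_request_header(API_VERSIONS_KEY, 0, 1, "iot-gateway")
request = frame(header)  # ApiVersions v0 has an empty request body
print(len(request), request.hex())
```

The complete request is 25 bytes (a 4-byte size prefix plus a 21-byte header), with no request line or text headers to parse as there would be with HTTP.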

Conclusion

Apache Kafka should be on the shortlist of every aspiring IoT developer, because it excels as the high-performance data-absorbing layer in an IoT infrastructure. It also has vast implications beyond IoT devices; some of the world’s largest companies use it for various data-centric tasks. Twitter uses Kafka for data ingestion into its Storm cluster, while Netflix uses it for real-time monitoring and event processing. The Apache Kafka website lists many other organizations harnessing the power of Kafka, and its documentation describes various use cases that can show how your business can benefit. Ready to jump into the world of Apache Kafka? We recommend CloudKarafka, the go-to solution for managing Apache Kafka servers.